AI Cheat Sheet: Get the Basics Right With These 2 Simple Frameworks

DDVC #41: Where venture capital and data intersect. Every week.

👋 Hi, I’m Andre and welcome to my weekly newsletter, Data-driven VC. Every Thursday I cover hands-on insights into data-driven innovation in venture capital and connect the dots between the latest research, reviews of novel tools and datasets, deep dives into various VC tech stacks, interviews with experts, and the implications for all stakeholders. Follow along to understand how data-driven approaches change the game, why it matters, and what it means for you.

Current subscribers: 10,651, +250 since last week

In the past 6 months, the topic of AI has captured the attention of the masses like never before. With its potential to revolutionize industries, transform daily life, and shape the future, it's no wonder that AI has become a hot topic of discussion. As someone who has been closely involved in this field, I have been frequently approached by numerous individuals seeking my perspective on the AI hype.

Engaging in these conversations, I couldn't help but notice a common trend—many people seem to confuse the fundamentals and mix up various concepts regarding AI. It became evident that amidst the excitement and buzz surrounding AI, there is a need for clear and simple frameworks to help demystify this complex field.

That is precisely the topic of today’s post. My aim is to share an AI cheat sheet with two simple frameworks that help you understand the basics of AI, differentiate between the various components of the AI stack, and discern the implications and potential applications more effectively.

By breaking down the complexities of AI into simple frameworks, we can bridge the gap between hype and reality, empowering both technical and non-technical readers to engage in meaningful discussions about AI's impact on our lives and society at large. So, whether you are an AI enthusiast, a curious learner, or a skeptic looking to separate facts from fiction, this blog post is for you.

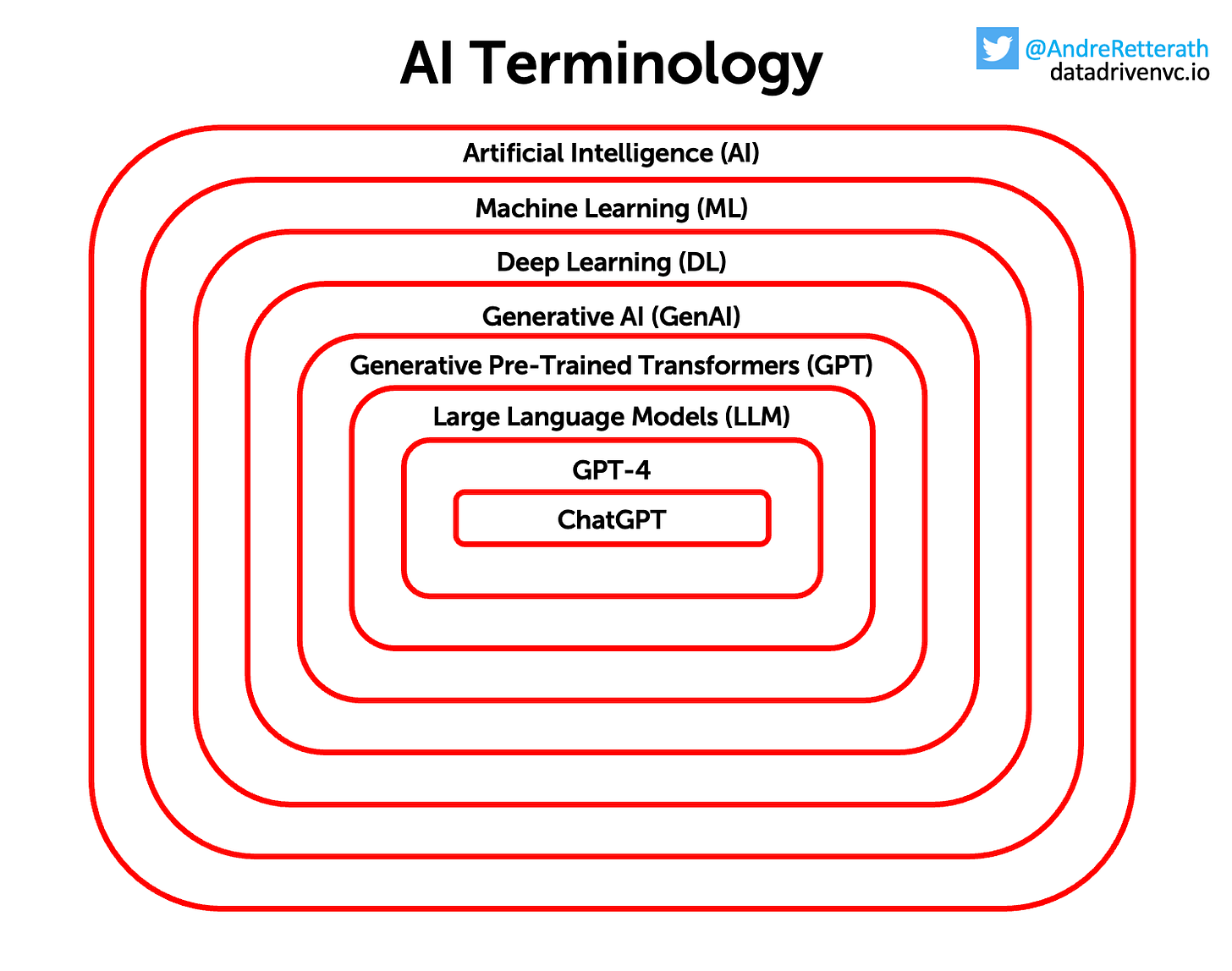

Framework #1 to get the terminology right

The most common mistake is that people confuse the terminology. So let’s get the basics right with the following onion chart and simplified definitions.

Artificial Intelligence (AI) is defined as intelligence demonstrated by machines, as opposed to intelligence displayed by humans or by other animals. "Intelligence" encompasses the ability to learn and reason, to generalize, and to infer meaning.

Machine learning (ML) is a branch of AI that focuses on the use of data and algorithms to imitate the way that humans learn, gradually improving its accuracy.

Deep Learning (DL) is a subset of ML methods comprising algorithms that are structured in layers to form an “artificial neural network”. These algorithms learn representations directly from data and can make decisions with little manual feature engineering, loosely inspired by the human brain.

Generative AI (GenAI) refers to a subset of DL models that can learn the patterns of their input training data and then generate high-quality text, images, and other content with similar characteristics.

Generative Pre-Trained Transformers (GPT) are a subset of GenAI models that leverage the transformer architecture, which excels at capturing long-range dependencies and learning contextual relationships in sequences of data.

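A Large Language Model (LLM) is, in this simplified framework, a GPT-style model that can recognize, summarize, translate, predict, and generate text and other forms of content based on knowledge gained from massive datasets. Strictly speaking, not every LLM is a GPT model, but the GPT family is the most prominent example.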

GPT-4 is, at the time of writing, the most recent GPT model version trained and provided by OpenAI. It is the successor to GPT-3.5, GPT-3, and GPT-2.

ChatGPT is a chatbot interface that allows users to interact with GPT-4 and other versions through natural language instead of code. Democratizing access to GPT models has triggered widespread adoption, so ChatGPT can essentially be understood as the catalyst for the recent hype.
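To make the nesting concrete, here is a minimal sketch of the onion chart as an ordered list. It assumes the layers nest in exactly the order in which they are defined above; the names `ONION`, `is_subset_of`, and `enclosing_layers` are made up for illustration. ChatGPT is left out on purpose because it is an interface to the models, not a layer of the onion.

```python
# Onion chart from the outermost to the innermost layer, following the
# order of the definitions above (an assumption about the chart).
ONION = [
    "Artificial Intelligence",
    "Machine Learning",
    "Deep Learning",
    "Generative AI",
    "Generative Pre-Trained Transformers",
    "Large Language Model",
    "GPT-4",
]


def is_subset_of(inner: str, outer: str) -> bool:
    """Return True if `inner` sits inside (or equals) `outer` in the onion."""
    return ONION.index(inner) >= ONION.index(outer)


def enclosing_layers(term: str) -> list:
    """List every broader category that contains `term`, outermost first."""
    return ONION[: ONION.index(term)]


print(is_subset_of("Deep Learning", "Machine Learning"))  # True
print(is_subset_of("Machine Learning", "Deep Learning"))  # False
print(enclosing_layers("Generative AI"))
```

Read this way, every deep learning system is also machine learning and AI, but not every AI system uses deep learning.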

With these few simple definitions, you’re now in a position to get the basics right. With that in place, let’s look at the ever-growing landscape of products and companies in the space.

Framework #2 to deconstruct the AI landscape

The AI landscape is changing continuously, and as a result most frameworks become outdated quickly. Similar to my “Deconstructing the AI Landscape” post from early 2020, the idea of this post is to share a framework whose core will hopefully still apply for a longer period of time. While the AI landscape has many nuances, it can be depicted in four layers (each of which can of course be split into further sub-segments).

Logically, it’s most useful to look at this framework chronologically based on the order of how the different layers evolved.

The infrastructure layer includes the full value chain related to compute. It starts with players like Dutch ASML (€ 270 BN market cap, more than Mercedes, BMW, and VW combined) who provide high-precision machines to companies like TSMC (€ 480 BN market cap) who manufacture semiconductor chips and supply them to NVIDIA (€ 1 TN market cap) who put them together into high-performance graphics processing units (GPUs). Moreover, this layer includes the big tech hyperscalers like AWS or Google Cloud who buy the GPUs, put them together into large-scale compute centers, and offer compute capacity to their customers. This layer is the most established part of the AI landscape as it has been evolving for decades, yet it’s one of the biggest bottlenecks for AI due to supply chain limitations.

The intelligence/foundation layer includes everything from data collection through model research and training to inference. Once all relevant public and/or private data has been collected and the models have been properly trained, they can be provided to the outside world via APIs. The interaction with these model APIs works via prompting, a term that describes any form of text, question, information, or code that communicates what response you're looking for. In return, the model generates a response based on the relationships it learned from the training data. Most components of this layer evolved in the aftermath of the breakthrough paper “Attention Is All You Need” in 2017.
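The prompt-in, response-out loop can be illustrated with a deliberately tiny stand-in. The sketch below is not how GPT models work internally and does not call any real API. `ToyModel` is a hypothetical word-pair (bigram) lookup table that is "trained" on a few words and then continues a prompt with the most frequent next word it has seen.

```python
from collections import defaultdict


class ToyModel:
    """Hypothetical stand-in for a model behind an API: a bigram table."""

    def __init__(self):
        # For each word, count which words followed it in the training data.
        self.next_counts = defaultdict(lambda: defaultdict(int))

    def train(self, corpus: str) -> None:
        words = corpus.lower().split()
        for current, following in zip(words, words[1:]):
            self.next_counts[current][following] += 1

    def complete(self, prompt: str, max_words: int = 5) -> str:
        """Continue the prompt by repeatedly picking the most frequent next word."""
        word = prompt.lower().split()[-1]
        output = []
        for _ in range(max_words):
            candidates = self.next_counts.get(word)
            if not candidates:
                break  # the model never saw this word during training
            word = max(candidates, key=candidates.get)
            output.append(word)
        return " ".join(output)


model = ToyModel()
model.train("venture capital funds startups . venture capital funds growth")
print(model.complete("venture", max_words=2))  # capital funds
```

Real foundation models replace the lookup table with a neural network holding billions of parameters, but the contract is the same: the response is derived from relationships found in the training data.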

The application layer encompasses a range of solutions tailored to specific use cases and verticals. These solutions are offered by a) established incumbents, such as Microsoft, Adobe, and Notion, who have recently incorporated LLMs into their offerings. Additionally, there are b) AI-native solutions like Jasper, CopyAI, and Runway that fundamentally reimagine how AI can solve specific problems at their core. The availability of pre-trained LLMs via APIs from intelligence layer companies facilitated the development of this layer. However, the integration and effective utilization of these models into products have posed challenges, impeding widespread adoption in the application layer.

This is why the most recent layer, the middle layer, naturally evolved. Solutions in the middle layer are mostly developer tools that seek to reduce the friction between the intelligence layer and the application layer. It includes companies that improve prompt capabilities (like LangChain or Dust.tt), vector databases (a type of database that stores data as high-dimensional vectors, so-called embeddings, and allows efficient similarity searches), and data labeling companies.
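As a rough sketch of what "similarity search over embeddings" means, the snippet below stores a few texts with hand-made three-dimensional vectors and ranks them by cosine similarity to a query vector. `TinyVectorStore` and the example embeddings are invented for illustration. In practice, embeddings come from a model, have hundreds or thousands of dimensions, and vector databases typically use approximate nearest-neighbor indexes instead of this brute-force scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Hypothetical in-memory store: a list of (text, embedding) pairs."""

    def __init__(self):
        self.items = []

    def add(self, text, embedding):
        self.items.append((text, embedding))

    def search(self, query, top_k=1):
        # Brute force: score every stored vector, then keep the best top_k.
        ranked = sorted(
            self.items,
            key=lambda item: cosine_similarity(query, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:top_k]]


store = TinyVectorStore()
# Hand-made embeddings, purely illustrative.
store.add("seed round announcement", [0.9, 0.1, 0.0])
store.add("GPU supply shortage", [0.1, 0.9, 0.2])
store.add("series A term sheet", [0.8, 0.2, 0.1])

print(store.search([1.0, 0.0, 0.0], top_k=2))
# ['seed round announcement', 'series A term sheet']
```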

Both frameworks are very simple, but by combining the right terminology with a clear understanding of the AI landscape, you will be well-positioned for your next conversation on AI.

Stay driven,

Andre

PS: If you want to learn more about this topic, check out our previous post on “Value accrual in the modern AI stack”

PPS: Check out my recent podcast on the future of VC and how we leverage data & AI to become more efficient, effective, and inclusive.

Thank you for reading. If you liked it, share it with your friends, colleagues, and everyone interested in data-driven innovation. Subscribe below and follow me on LinkedIn or Twitter to never miss data-driven VC updates again.

If you have any suggestions, want me to feature an article, research, your tech stack or list a job, hit me up! I would love to include it in my next edition😎
